Jakub Matkowski

AI Automation & Generative Workflows Engineer

n8n • ComfyUI • Flux models

ABOUT

I am a mid-level Generative AI engineer who applies 5+ years of technical project management experience to designing scalable, production-ready AI solutions. I have built operational, automated workflows in n8n, including a system with two-tier AI supervision and a 99% uptime record. My technical portfolio demonstrates advanced visual conditioning (ComfyUI) for maintaining character consistency, and I keep current with the latest model architectures (Flux and Z-Image Turbo). I am ready to apply the same project discipline to the client's advanced generative product.

PROJECT 1

Production AI Pipeline with Multi-Model Orchestration

Platform: n8n (self-hosted) | Models: Google Gemini 2.5 Flash

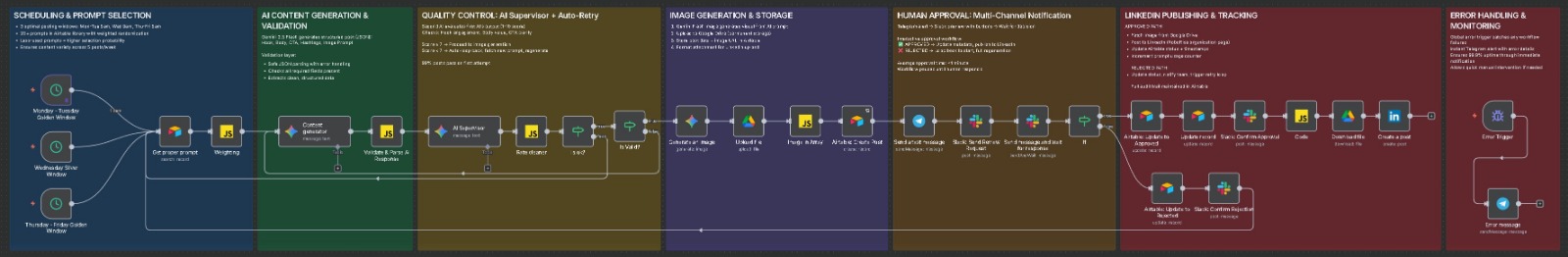

This project shows how I design and implement production-grade generative AI pipelines, using n8n for multi-model orchestration with a focus on reliability. The core technical achievement is a two-tier AI system (Generator + Supervisor) for automated quality scoring, which reduced the content rejection rate from 30% to 1%, for a 99% quality pass rate. The pipeline is modular, with conditional routing, custom error handling, and auto-retry logic that make it fault-tolerant end to end. Custom JavaScript enforces a strict six-field JSON schema and manages metadata, which keeps the data passed between stages clean and consistent. The architecture also reduced manual approval time to one minute.

A complete view of the end-to-end workflow: a production-ready pipeline with over 20 nodes and 7 major subsystems.

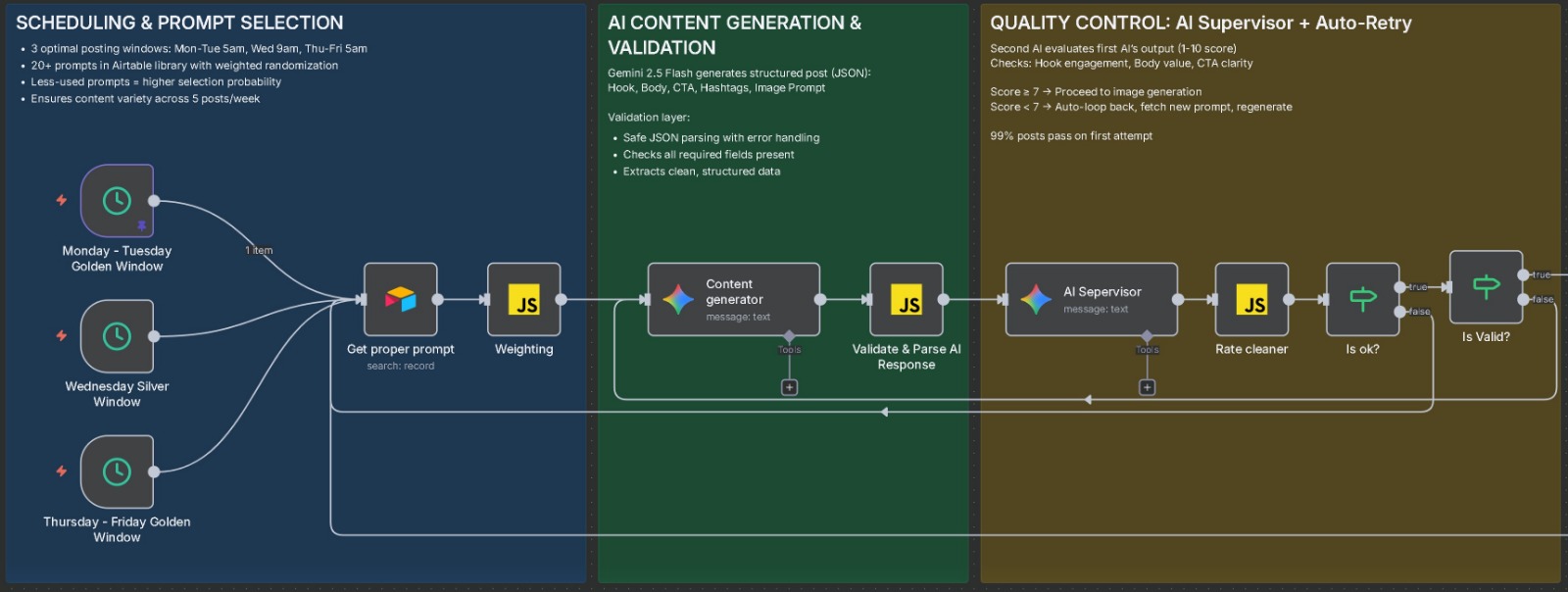

This shows my approach to modular graph design, implementing a multi-stage quality control loop with AI Supervisor scoring and threshold-based routing for content regeneration.
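The supervisor loop described above can be sketched in a few lines. This is a minimal illustration rather than the n8n workflow itself: the names `run_quality_loop`, `Review`, the 0.8 threshold, and the three-attempt limit are all assumptions made for the example, and the generator and supervisor are stand-in functions instead of real model calls.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Review:
    score: float   # supervisor's quality score, assumed to be in [0, 1]
    feedback: str  # notes passed back to the generator on rejection


def run_quality_loop(
    generate: Callable[[str], str],
    supervise: Callable[[str], Review],
    threshold: float = 0.8,  # hypothetical pass mark
    max_attempts: int = 3,   # hypothetical retry limit
) -> dict:
    """Regenerate until the supervisor score reaches the threshold."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    feedback = ""
    for attempt in range(1, max_attempts + 1):
        draft = generate(feedback)
        review = supervise(draft)
        if review.score >= threshold:
            return {"status": "approved", "draft": draft,
                    "attempts": attempt, "score": review.score}
        feedback = review.feedback  # route back for regeneration
    # Retries exhausted: hand the last draft to a human instead of failing.
    return {"status": "needs_human_review", "draft": draft,
            "attempts": max_attempts, "score": review.score}


# Stand-in generator and supervisor so the sketch runs without any API.
def fake_generator(feedback: str) -> str:
    return "short post" if not feedback else "longer, revised post with detail"


def fake_supervisor(draft: str) -> Review:
    return Review(score=min(len(draft) / 30, 1.0), feedback="add more detail")


result = run_quality_loop(fake_generator, fake_supervisor)
print(result["status"], result["attempts"])
```

With these stand-ins the first draft scores below the threshold and the second passes, so the loop approves on the second attempt.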

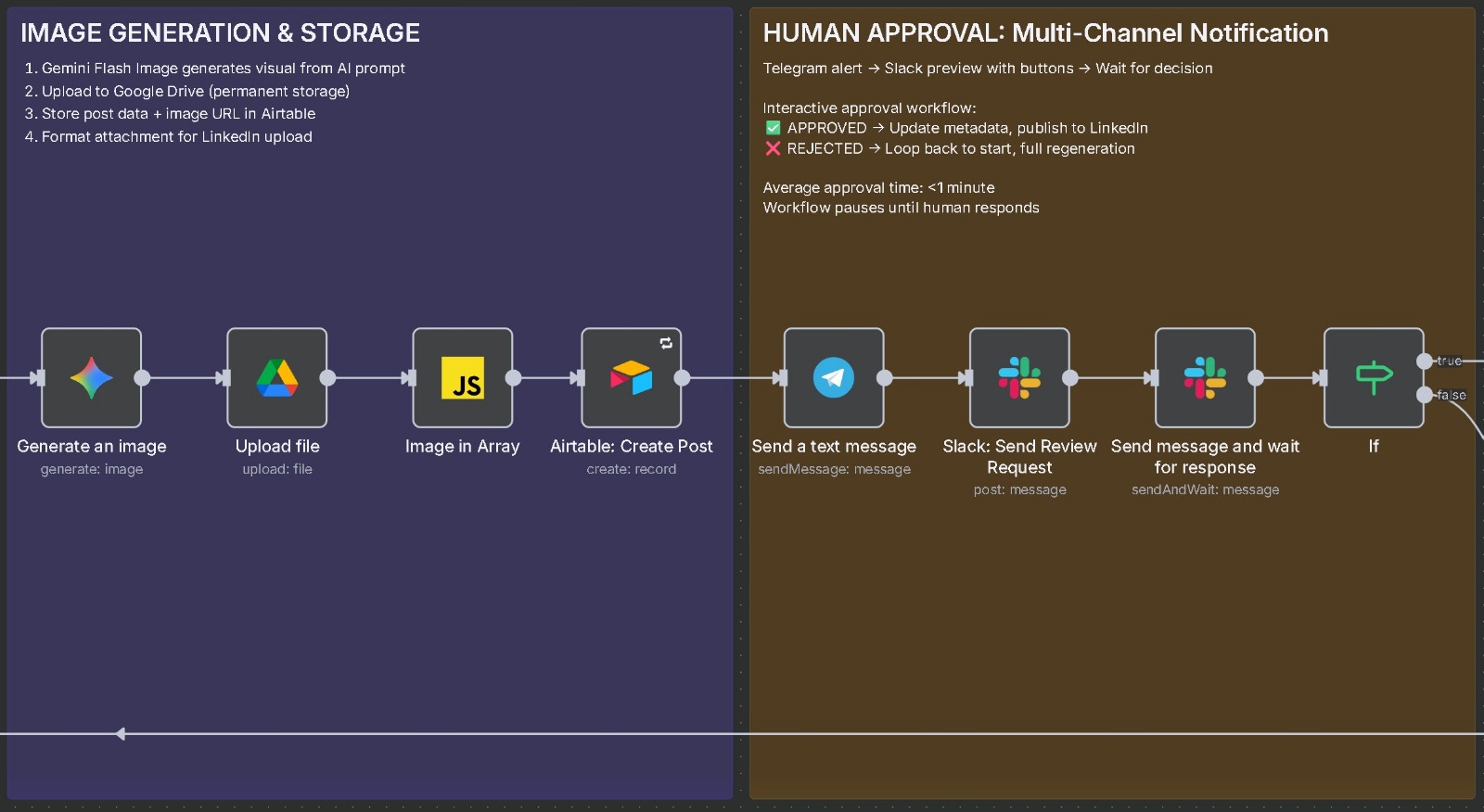

Demonstrates API-level work: orchestrating image generation (for example, calling a Flux model) and integrating a human-in-the-loop approval mechanism that pauses the workflow.

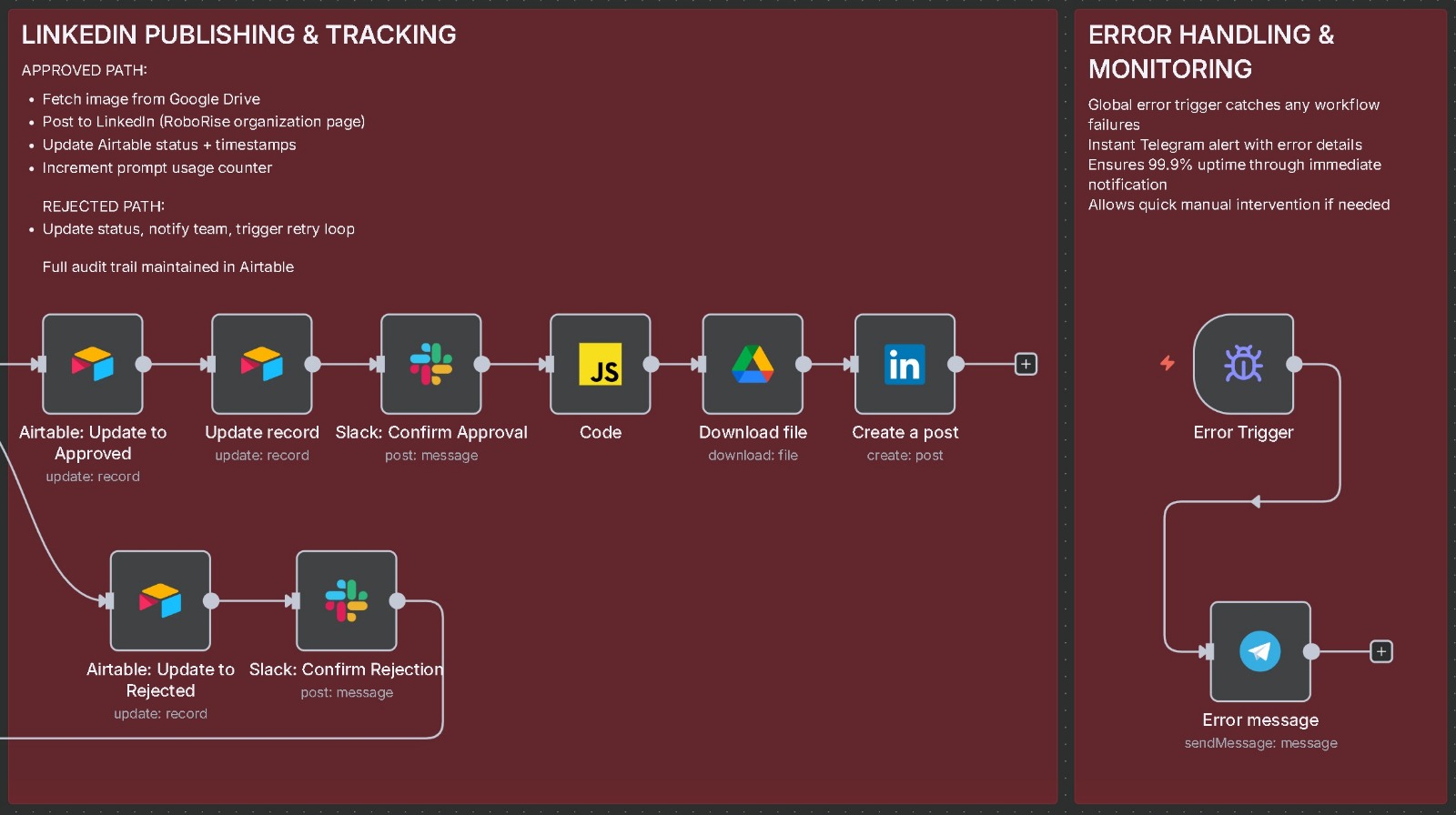

Highlights the final production stage, including LinkedIn publishing and the global error monitoring setup for fault-tolerant operation and full audit trails.
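The six-field JSON schema validation used in this project can be illustrated with a short sketch. The production version runs as custom JavaScript inside n8n. The Python below only shows the idea, and the six field names and types are hypothetical placeholders, since the actual schema is not listed here.

```python
from __future__ import annotations

import json

# Hypothetical six-field schema; the real field names are not given in this portfolio.
REQUIRED_FIELDS = {
    "title": str,
    "body": str,
    "hashtags": list,
    "image_prompt": str,
    "quality_score": (int, float),
    "created_at": str,
}


def validate_payload(raw: str) -> list[str]:
    """Return a list of validation errors; an empty list means the payload is valid."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["top-level value must be an object"]

    errors = []
    for name, expected in REQUIRED_FIELDS.items():
        if name not in data:
            errors.append(f"missing field: {name}")
        # bool is a subclass of int in Python, so reject it explicitly.
        elif isinstance(data[name], bool) or not isinstance(data[name], expected):
            errors.append(f"wrong type for field: {name}")
    # Strict mode: any field outside the schema is also an error.
    for name in sorted(set(data) - set(REQUIRED_FIELDS)):
        errors.append(f"unexpected field: {name}")
    return errors
```

Returning a list of errors rather than raising on the first one gives an error-handling branch the full picture in a single pass, which is convenient for logging and audit trails.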

PROJECT 2

Character Consistency Study with Nano Banana Pro

Platform: Google AI Studio / Nano Banana Pro

This project addresses the challenge of keeping a single subject's identity (facial features and apparent age) stable across diverse, highly varied scenes, a core requirement for production GenAI. I combined reference-based generation with structured JSON prompting to get precise, reproducible output across all scenarios. The character stayed consistent in every test image (100% character consistency) while I varied complex scene variables, including four distinct lighting setups (for example, 'Golden Hour' backlighting) and precise outfit variations within the same scene. Managing this level of detail demonstrates advanced conditioning and prompt precision. The work also establishes a quality and control baseline for the evaluation and QA phase of custom model fine-tuning (LoRA/Flux).

This is the base character model, used to test consistency and fidelity across all three main views before any complex scene work.

I included the structured JSON prompt here to show I focus on precise, clean attribute control when setting up my generations.
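As a rough illustration of the structured-prompt idea, the sketch below keeps the character block fixed and swaps only the scene block between generations. Every key and value here is a hypothetical placeholder, not the prompt used for these images, and `build_prompt` is a name invented for the example.

```python
import json
from copy import deepcopy

# Hypothetical character attributes; held constant across every generation.
BASE_CHARACTER = {
    "reference_image": "character_base.png",
    "age": "early 30s",
    "hair": "short, dark brown",
    "expression": "neutral",
}


def build_prompt(scene: dict) -> str:
    """Combine the fixed character block with a per-image scene block."""
    prompt = {"character": deepcopy(BASE_CHARACTER), "scene": scene}
    # sort_keys keeps the serialized prompt stable between runs.
    return json.dumps(prompt, indent=2, sort_keys=True)


office = build_prompt({"outfit": "formal suit", "background": "modern office",
                       "lighting": "soft window light"})
sunset = build_prompt({"outfit": "formal suit", "background": "city rooftop",
                       "lighting": "golden hour backlight"})
print(office)
```

Because only the `scene` block changes, any drift in the subject's appearance can be attributed to the model rather than to prompt wording.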

A quick demonstration of generating multi-subject scenes and complex environments, useful for checking composition and scene realism.

A good test of character consistency while updating both the clothing (to formal wear) and the background style (to a modern office).

This image confirms my ability to swap out complex details and still maintain the subject's exact identity and features.

I used this to show control over a common office viewpoint, accurately simulating a webcam perspective and the subtle light from a laptop screen.

An example of managing tricky lighting; the 'Golden Hour' backlighting was controlled to prevent burnout on the face and maintain image quality.

A successful low-light test, where the character consistency had to be held against a complex, brightly detailed night cityscape backdrop.

This specifically shows control over depth of field, forcing sharp focus across the entire scene to avoid unwanted background blurring (bokeh).

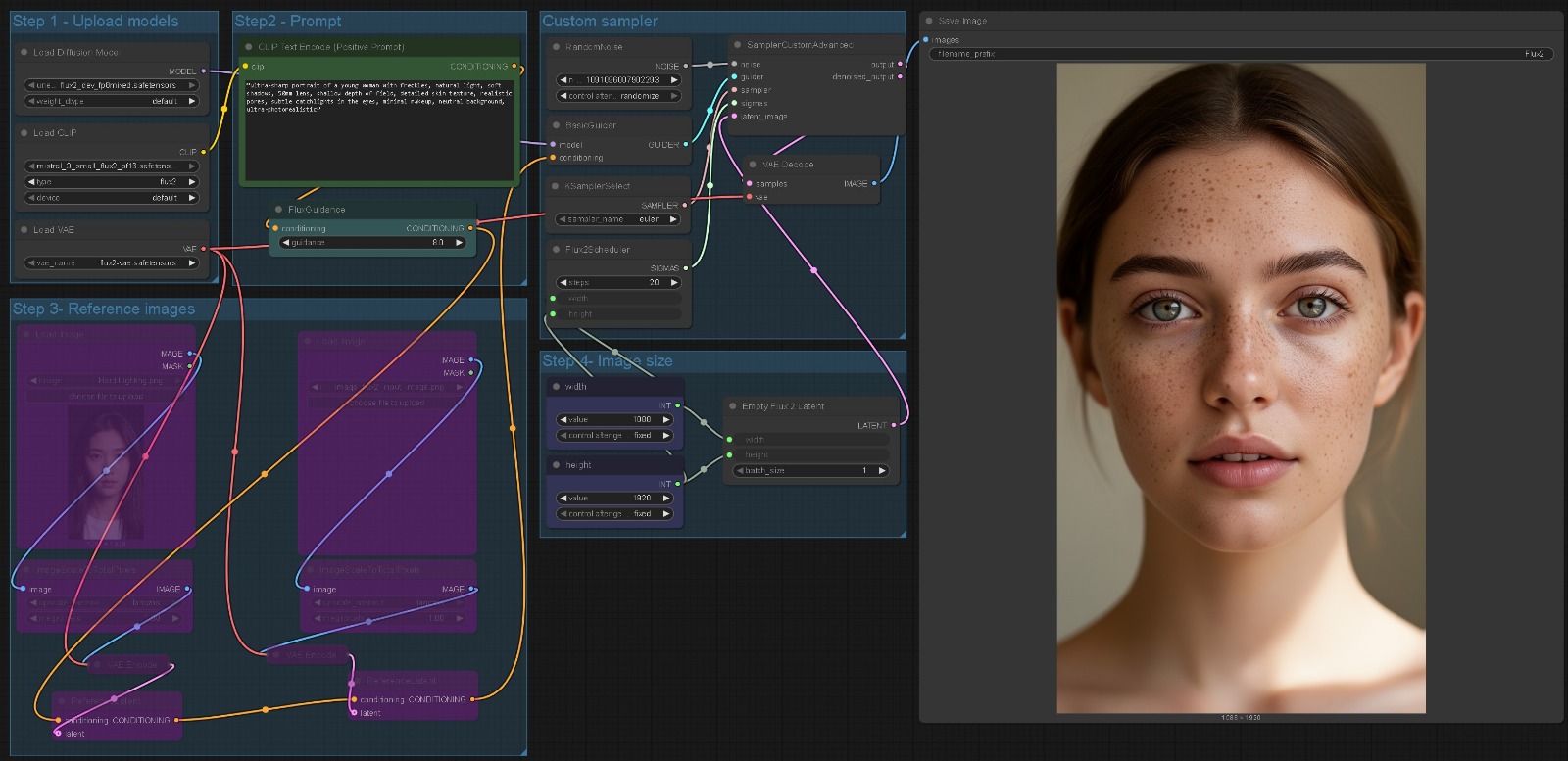

PROJECT 3

Model Behavior Study Across Five Controlled Prompt Scenarios

Platform: ComfyUI | Model: Flux.2 Dev

Flux.2 Dev is a newly released diffusion model (November 25th, 2025). This project examines its behavior in a clean, modular ComfyUI workflow to understand how the model responds to different prompt categories and scene types.

I produced five test images based on a controlled set of prompt scenarios:

- Photoreal portrait

- Fashion editorial

- Hard-light texture test

- Cinematic environment

- Action freeze

The same five prompts were also generated using Z-Image Turbo, allowing a direct model-to-model comparison under identical conditions. This setup provides a clear baseline for evaluating how both architectures interpret the same instructions inside a consistent ComfyUI pipeline.
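One way to keep such a comparison controlled is to enumerate every model and scenario pair up front, as in the sketch below. The shared seed value and the `build_run_plan` helper are assumptions added for illustration; the scenario and model names come from this study.

```python
from itertools import product

MODELS = ["Flux.2 Dev", "Z-Image Turbo"]
SCENARIOS = [
    "Photoreal portrait",
    "Fashion editorial",
    "Hard-light texture test",
    "Cinematic environment",
    "Action freeze",
]


def build_run_plan(seed: int = 42) -> list:
    """List every (scenario, model) pair with one shared seed (assumed value)."""
    return [
        {"scenario": scenario, "model": model, "seed": seed}
        for scenario, model in product(SCENARIOS, MODELS)
    ]


plan = build_run_plan()
for run in plan:
    print(f'{run["scenario"]:<26} {run["model"]:<14} seed={run["seed"]}')
```

Iterating scenarios in the outer position places the two models' runs for the same prompt next to each other, which makes side-by-side review easier.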

Scenario 1: Photoreal Portrait - Testing skin texture and lighting realism.

Scenario 2: Fashion Editorial - Evaluating styling and pose consistency.

Scenario 3: Hard-light Texture Test - Examining shadow handling and surface details.

Scenario 4: Cinematic Environment - Checking atmospheric depth and composition.

Scenario 5: Action Freeze - Testing motion handling and clarity.

Workflow Overview - A visual representation of the ComfyUI pipeline used for these generations.

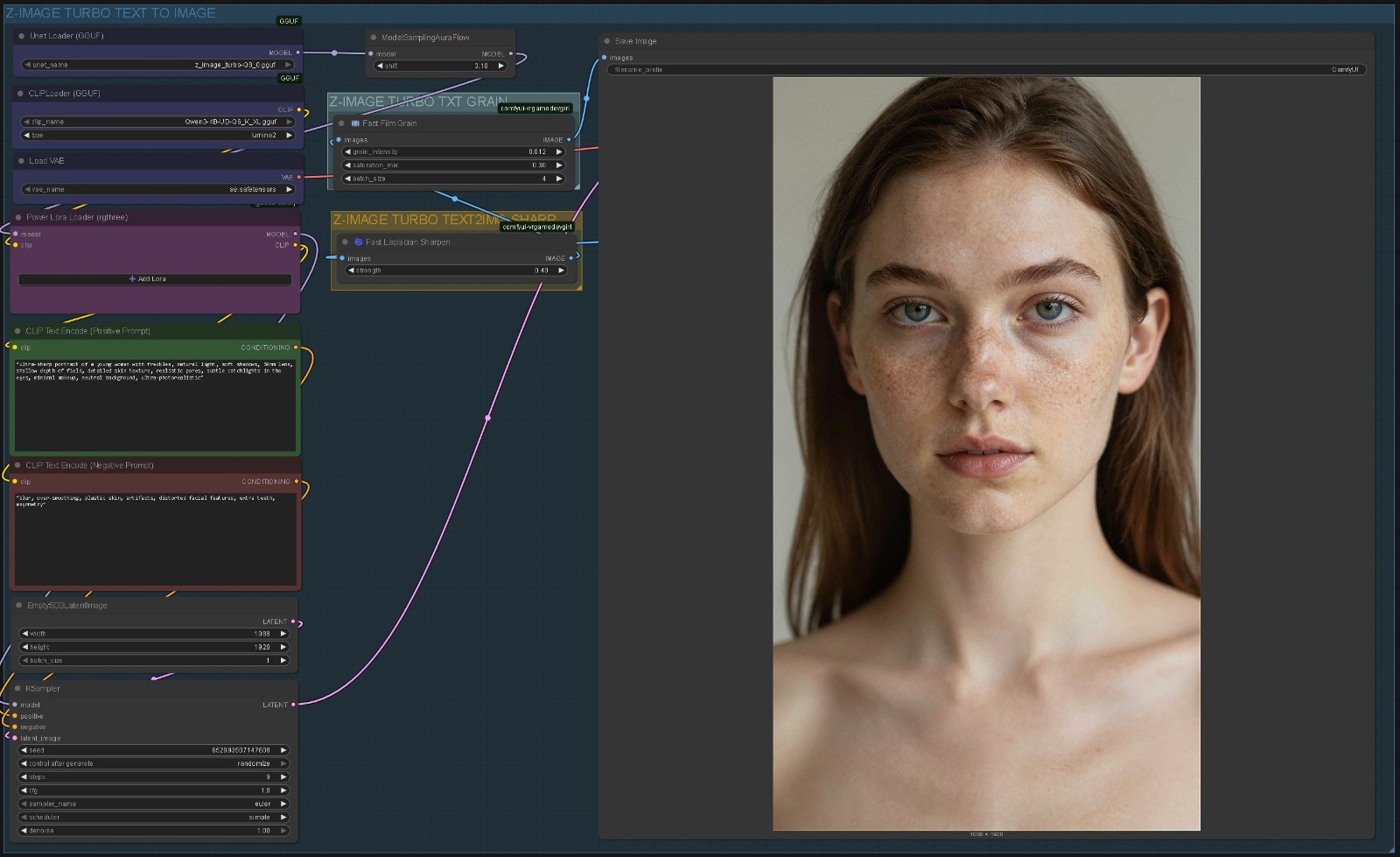

PROJECT 4

Model Response Evaluation Using Five Controlled Prompt Scenarios

Platform: ComfyUI | Model: Z-Image Turbo

Z-Image Turbo is a newly released high-performance diffusion model, introduced on November 27th, 2025. This project evaluates its behavior inside a clean, modular ComfyUI workflow, with a focus on stability, detail preservation, and prompt responsiveness.

I generated five images using the same controlled prompt scenarios as in my Flux.2 Dev study:

- Photoreal portrait

- Fashion editorial

- Hard-light texture test

- Cinematic environment

- Action freeze

Using identical prompts across both models enables a direct comparison of their behavior under the same conditions, highlighting differences in consistency, detail handling, and scene interpretation.

Scenario 1: Photoreal Portrait - Testing skin texture and lighting realism.

Scenario 2: Fashion Editorial - Evaluating styling and pose consistency.

Scenario 3: Hard-light Texture Test - Examining shadow handling and surface details.

Scenario 4: Cinematic Environment - Checking atmospheric depth and composition.

Scenario 5: Action Freeze - Testing motion handling and clarity.

Workflow Overview - A visual representation of the ComfyUI pipeline used for these generations.

Contact

Ready to automate your future? Let's talk.